rqlite is a lightweight, open-source, distributed relational database written in Go, with SQLite as its storage engine. 5.10.0 is now out, capping a series of releases that brings significant improvements in disk usage and startup times.

Since the release of 5.6.0 there have been a number of distinct improvements, all aimed at reducing the amount of data written to disk. Why focus on disk usage? First, because it’s long been obvious that disk IO is the performance bottleneck for rqlite, due to fsync’ing to the Raft log. Second, rqlite aims to be a lightweight database, so keeping its disk footprint low is desirable. 5.10.0 now contains the following changes:

- Protocol Buffer (Protobuf) encoding of the SQL commands before they are written to the Raft log. Generally speaking, Protobuf encoding is more space-efficient than JSON, which is what was used in 5.6.0.

- Compression of SQL queries (and batches of SQL queries) above a certain size threshold, which happens before the Protobuf encoding, and before the commands are written to the Raft log. The larger the SQL queries sent to rqlite, the greater the savings from compression. This also has the nice effect of reducing network traffic from the leader node to other nodes in the cluster.

- Compressing the copy of the SQLite database that becomes part of the Raft snapshot. Snapshotting is a critical step in truncating the Raft log, a process that ensures that the log doesn’t grow without limit. This snapshot is written to disk, for restart and recovery purposes.

- No more use of disk for scratch space, during creation of the snapshot. Snapshot-creation now takes place entirely in memory.

- During normal operation rqlite can use either an in-memory (the default) or on-disk SQLite database. However, restarting an on-disk node could previously take a long time, because applying changes from the Raft log to an on-disk database is significantly slower than writing to an in-memory database. Now, when running in on-disk mode, 5.10.0 first rebuilds the SQLite database in memory, and only when that is complete does it move the database to disk. This means disk-based restarts are basically as fast as memory-based restarts.
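To make the threshold idea from the list above concrete, here is a minimal Go sketch of "compress only when the payload is large enough". It is not rqlite's actual code: the function names, the use of gzip, and the 64-byte threshold are all assumptions chosen for illustration, since this post doesn't state which algorithm or cutoff rqlite uses.

```go
package main

import (
	"bytes"
	"compress/gzip"
	"fmt"
	"io"
	"strings"
)

// compressionThreshold is a hypothetical cutoff in bytes, chosen only for
// this example. The value rqlite really uses is not given in the post.
const compressionThreshold = 64

// maybeCompress gzips payload only when it is larger than threshold.
// The boolean result records whether compression was applied, so whoever
// reads the entry back knows whether it must be decompressed first.
func maybeCompress(payload []byte, threshold int) ([]byte, bool, error) {
	if len(payload) <= threshold {
		return payload, false, nil
	}
	var buf bytes.Buffer
	zw := gzip.NewWriter(&buf)
	if _, err := zw.Write(payload); err != nil {
		return nil, false, err
	}
	// Close flushes any buffered data and writes the gzip footer.
	if err := zw.Close(); err != nil {
		return nil, false, err
	}
	return buf.Bytes(), true, nil
}

// decompress reverses maybeCompress for a payload that was compressed.
func decompress(data []byte) ([]byte, error) {
	zr, err := gzip.NewReader(bytes.NewReader(data))
	if err != nil {
		return nil, err
	}
	defer zr.Close()
	return io.ReadAll(zr)
}

func main() {
	small := []byte(`INSERT INTO foo(name) VALUES("fiona")`)
	large := []byte(strings.Repeat(string(small)+";", 200))
	for _, p := range [][]byte{small, large} {
		out, compressed, err := maybeCompress(p, compressionThreshold)
		if err != nil {
			panic(err)
		}
		fmt.Printf("in=%d bytes, out=%d bytes, compressed=%v\n", len(p), len(out), compressed)
	}
}
```

Small statements pass through untouched, which avoids paying the gzip header overhead on payloads that would not shrink anyway, while large or batched statements are the ones that benefit.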

Measuring the impact

While it’s fairly easy to predict the impact the changes above will have, it’s always important to confirm the results. So I ran a simple, 6-hour load test against a single rqlite node, which used an in-memory SQLite database. The load test involved inserting a simple record into a table, over and over again. I used rqbench to perform the writes:

rqbench -o 'CREATE TABLE foo (id INTEGER NOT NULL PRIMARY KEY, name TEXT)' -m 100 -n 1500000 'INSERT INTO foo(name) VALUES("fiona")'

I used InfluxDB, Grafana, and Telegraf (specifically the http plugin) to collect (via the rqlite diagnostics endpoint) and display the results.
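For readers who want to look at the same diagnostics without setting up Telegraf, the following Go sketch polls a node's status endpoint once and lists the top-level sections of the JSON it returns. This is a stand-in for illustration, not the collection setup used for this test. The URL assumes a local node on rqlite's default HTTP address, and the helper names (`decodeStatus`, `lookup`, `fetchStatus`) are hypothetical.

```go
package main

import (
	"encoding/json"
	"fmt"
	"io"
	"net/http"
	"os"
	"time"
)

// decodeStatus parses a JSON document into a generic map, so no assumptions
// are made about which fields the diagnostics output contains.
func decodeStatus(data []byte) (map[string]any, error) {
	var status map[string]any
	if err := json.Unmarshal(data, &status); err != nil {
		return nil, err
	}
	return status, nil
}

// lookup walks nested JSON objects key by key and returns the value found.
// It reports false if any key is missing or an intermediate value is not
// an object.
func lookup(status map[string]any, path ...string) (any, bool) {
	var cur any = status
	for _, key := range path {
		obj, ok := cur.(map[string]any)
		if !ok {
			return nil, false
		}
		cur, ok = obj[key]
		if !ok {
			return nil, false
		}
	}
	return cur, true
}

// fetchStatus performs a single GET against url and decodes the body.
func fetchStatus(client *http.Client, url string) (map[string]any, error) {
	resp, err := client.Get(url)
	if err != nil {
		return nil, err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		return nil, fmt.Errorf("unexpected HTTP status: %s", resp.Status)
	}
	body, err := io.ReadAll(resp.Body)
	if err != nil {
		return nil, err
	}
	return decodeStatus(body)
}

func main() {
	// Assumption: a node is running locally on the default HTTP address.
	const url = "http://localhost:4001/status"
	client := &http.Client{Timeout: 5 * time.Second}
	status, err := fetchStatus(client, url)
	if err != nil {
		fmt.Fprintln(os.Stderr, "fetch failed:", err)
		return
	}
	// Print only the top-level section names, whatever they happen to be.
	for section := range status {
		fmt.Println(section)
	}
}
```

Once you know which sections your version reports, `lookup(status, "section", "field")` can pull out an individual value to log or graph over time.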

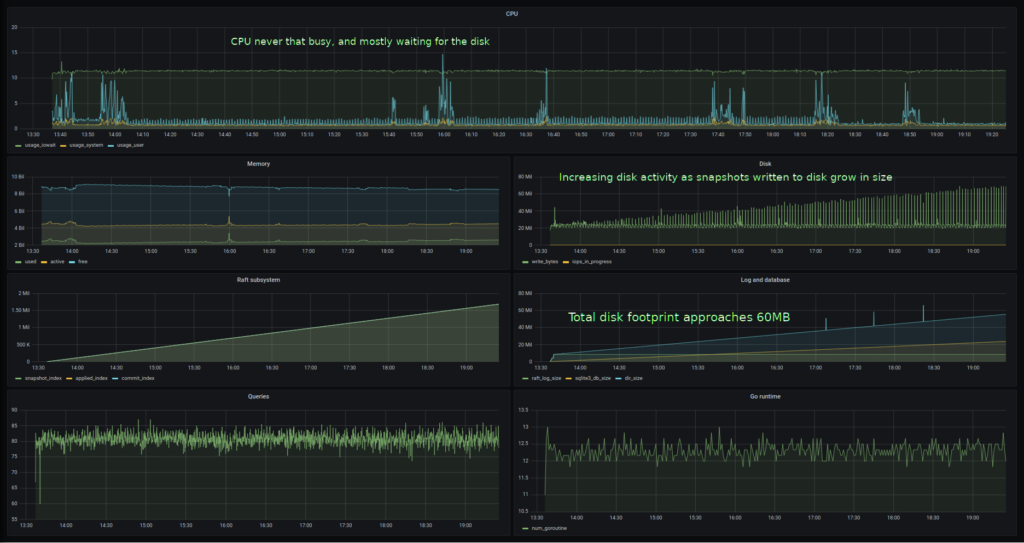

Let’s take a look at 5.6.0 performance first (technically a patched version of 5.6.0 so the required metrics were collected).

Nothing too out of the ordinary. The CPU is never that busy and is mostly waiting for IO. However, both the amount of data being written to disk and the rate at which it is written steadily increase, as the SQLite snapshots grow in size. The Raft subsystem operated normally, committing log entries and performing a snapshot every couple of minutes or so.

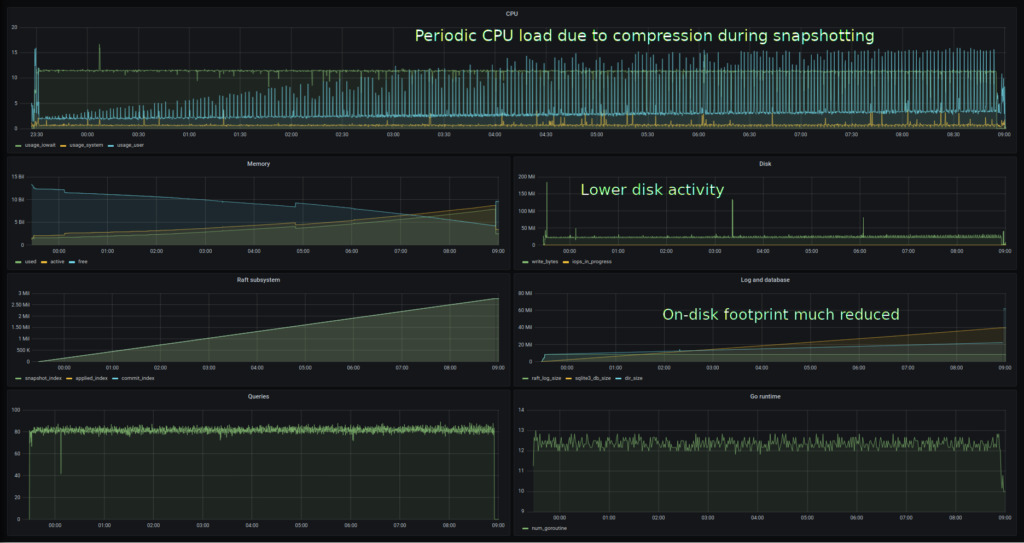

Compare the results of the same test, this time for 5.10.0.

This time disk activity is significantly down, but the trade-off is more CPU activity during the snapshot-compression cycles. This is exactly the trade-off I wanted to make, but it’s good to see that it is actually happening. Note that the disk footprint is much smaller too — it’s only 30% as large for (roughly) the same amount of SQL insertions.

What didn’t change?

The write performance of rqlite generally hasn’t changed that much between versions, at least not with these relatively small SQL statements. Mildly disappointing perhaps. However, snapshotting should put much less burden on the system, meaning much less disruption to write performance during that periodic process. And larger SQL statements should see a much bigger write-performance improvement relative to 5.6.0, as the compression wins should also be bigger.

It’s also good to see that write performance remained steady throughout each test. This wasn’t something I had explicitly tested before, and I hadn’t received reports of any issues, but again, it’s good to see.

5.10.0 adds some other small features, as well as some more diagnostics. I also expanded the test suite, as code quality is very important to me. So download it and check it out.