PostgreSQL 9.6 improves synchronous replication and more

| Please consider subscribing to LWN Subscriptions are the lifeblood of LWN.net. If you appreciate this content and would like to see more of it, your subscription will help to ensure that LWN continues to thrive. Please visit this page to join up and keep LWN on the net. |

The PostgreSQL project released version 9.6 on September 29th. This new major release has an assortment of new goodies for PostgreSQL fans, including parallel query and phrase search, new options for synchronous replication, remote query execution using foreign data wrappers, "crosstab" data transformations in psql, and more. Together with version 9.6, the community released a completely rewritten version of the pgAdmin database graphical interface.

The 9.6 release is also notable because it was an on-time release, after two years of increasingly late releases by the project. Version 9.5 was released in January 2016 instead of its target of October 2015. The timeliness of the release is probably due to the project's adoption of a release management team last year.

Despite the short development window, 9.6 is full of interesting features. We'll explore multiple synchronous replicas, foreign data wrapper changes, crosstabs and the new pgAdmin here.

Multiple Synchronous Replicas and Remote Apply

PostgreSQL has had "synchronous replication" (SR) as an option since version 9.1. The "synchronous" part of that refers to the fact that transactions are committed on one or more replicas before a success message is returned to the database client. This guarantees that, if the client saw a commit message, the data is persisted to at least two nodes. Users primarily use this option when data is so valuable that they'd rather have the database reject writes than risk losing them after commit should the database cluster master fail.

Such users are also willing to put up with slower response times due to the doubled network lag. But they don't have to pay this cost with every commit: PostgreSQL allows users to choose synchronous or asynchronous replication on a per-commit basis. If only a minority of the user's data is critical, only that data needs to be copied before commit.

Synchronous replication had two major limitations that have been addressed in version 9.6. One is that SR only supported a single synchronous replica at a time; while multiple replicas could be designated as "candidate replicas," only one of them would be synchronous with the master for any individual commit. This meant that users wanting to guarantee that data was written to at least three locations — sometimes a requirement for valuable data — had no way to do so. Now they do.

In prior versions, you would designate the synchronous replicas for a master server by setting the 'synchronous_standby_names' parameter like so:

synchronous_standby_names = 'sanfran, london, singapore'

What this meant was that the master would attempt to write transactions synchronously to the replica 'sanfran'. If sanfran was offline, then it would write to london. The other replicas would maintain asynchronous replication so that they would be prepared to take over if required. For example, in that cluster you might see this:

postgres=# select application_name as server, state,

sync_priority as priority, sync_state

from pg_stat_replication;

server | state | priority | sync_state

-----------+-----------+----------+------------

sanfran | streaming | 1 | sync

london | streaming | 2 | potential

singapore | streaming | 3 | potential

In version 9.6, you can designate a number of synchronous replicas to target, like so:

synchronous_standby_names = '2 (sanfran, london, singapore)'

This means that both sanfran and london need to acknowledge transactions before they commit. We can see that there are now more than one replica in sync:

server | state | priority | sync_state

-----------+-----------+----------+------------

sanfran | streaming | 1 | sync

london | streaming | 2 | sync

singapore | streaming | 3 | potential

If sanfran or london lose their connection to the master, then singapore becomes synchronous. Now the replicas database view looks like this:

server | state | priority | sync_state

-----------+-----------+----------+------------

london | streaming | 2 | sync

singapore | streaming | 3 | sync

If two servers in that group go offline, the master will stop accepting synchronous writes. Clearly, this is a feature intended for data valuable enough to put up with a lot of overhead to guarantee that no data will be lost.

The other limitation with SR addressed in this release has to do with the consistency of visible data. Previously, while the data was guaranteed to persist on the synchronous replica, that didn't mean that it was necessarily visible if someone queried the replica too soon. The reason that data might not be immediately visible was that the transaction could be written to the transaction log but not applied to the copy of the database in memory due to blocking by other concurrent requests on the replica. This could cause an application that load-balances queries between the master and the replica to fail to read a write it just committed on the master.

PostgreSQL 9.6 fixes this with the remote_apply option for synchronous transactions:

bench=# begin;

BEGIN

bench=# set synchronous_commit = 'remote_apply';

SET

bench=# update pgbench_accounts

set abalance = 100

where aid = 100 and bid = 1;

UPDATE 1

bench=# commit;

This mode does not return to the client until the transaction is visible on all synchronous replicas. This means that a read against a replica even a millisecond later will return consistent data. Of course, this results in longer response times, so the new mode is mostly useful for applications with a low volume of database writes who want to support a write-then-read pattern for load-balancing among read replicas.

The next step for synchronous replication is supporting true quorum replication. This feature, which is likely to be available in PostgreSQL 10 next year, allows a user to specify a group of replicas and, for example, designate that transactions need to be synchronously committed to three out of five of them in no particular order. This will allow users to improve response times over 9.6's prioritized lists of replicas without reducing retention guarantees.

Remote Query Operations

In an ongoing multi-year effort, the PostgreSQL project has been improving its database federation features. Federation is a set of features where a single database server can run query operations against multiple other servers in order to spread out workloads or integrate different applications. In PostgreSQL, federation is accomplished with Foreign Data Wrappers (FDW), a feature based on ANSI SQL standard syntax.

While Postgres's FDW extensions support a multitude of data sources as diverse as MySQL, Redis, and Twitter, the most powerful FDW is the Postgres-to-Postgres extension, postgres_fdw. While postgres_fdw was previously capable of executing searches (scans) on remote servers, sorting data and joins between tables would be executed locally. Now, these can be performed on the remote server as well, a feature known as "push-down".

For example, say that you wanted the top ten accounts from the accounts table on a remote server. You could now grab that and offload all of the work onto that server. You can also join accounts to branches on the remote server, returning only the joined data. First, let's import all of the tables in a remote PostgreSQL database as FDW tables in this database:

CREATE EXTENSION postgres_fdw;

CREATE SERVER bank_server

FOREIGN DATA WRAPPER postgres_fdw

OPTIONS ( host 192.168.1.102, dbname 'accountdb',

use_remote_estimate on );

CREATE USER MAPPING FOR CURRENT_USER

SERVER bank_server

OPTIONS ( name 'acct_user', password 'mxm34dd7i' );

The above commands are the same since version 9.3, except for the new use_remote_estimate option. This option, if the extension supports it, tells the database to estimate the cost of executing queries on the remote server. Next we'll import all tables in the remote database, and run ANALYZE to gather statistics on them:

IMPORT FOREIGN SCHEMA public

FROM SERVER bank_server

INTO SCHEMA public;

ANALYZE;

Now we can join tables on the remote server:

bank=# explain analyze

select branchname, aid, abalance

from accounts

join branches using (bid)

order by abalance desc limit 10;

QUERY PLAN

-----------------------

Limit

-> Sort

Sort Key: pgbench_accounts.abalance DESC

Sort Method: top-N heapsort Memory: 25kB

-> Foreign Scan

Relations: (public.accounts)

INNER JOIN (public.branches)

Planning time: 9.892 ms

Execution time: 497.107 ms

So here the database is showing you that the "Foreign Scan" is being performed on the remotely JOINed relations, and not by retrieving them and JOINing them locally. For a query like the above, this can be several orders of magnitude faster, depending on table sizes and network bandwidth.

For writing data, postgres_fdw previously had the performance issue that it would only delete one row at a time on the remote server. This was often quite slow. Now it can execute UPDATEs and DELETEs entirely on the other server:

bank=# explain delete

from accounts

where bid = 7;

QUERY PLAN

------------------

Delete on pgbench_accounts

-> Foreign Delete on pgbench_accounts

Version 9.6 also includes the ability to execute user-defined functions on remote servers. While these features are currently only available in the postgres_fdw extension, the PostgreSQL community hopes that other FDW extension authors will take advantage of the support for them in the core database engine. For version 10, the developers will be working on remote execution of aggregation and other operations.

Crosstabs in psql

PostgreSQL's command-line database client, psql, has gained another feature that helps users treat it like a complete programming environment. In 9.6, they can now use "crosstabs" in order to manipulate data for copy and paste reporting.

Crosstabs are a feature many people will be familiar with from spreadsheet programs, which sometimes call them "pivot tables." The idea is to take the results of a query, and re-arrange it so that some of the rows become columns. For example, say I had this list of totals for LWN subscribers (all data is fictional):

lwn=# select city, level, count(*)

lwn-# from lwn_subscribers

lwn-# group by city, level;

city | level | count

---------------+--------------+-------

San Francisco | starving | 203

Brisbane | leader | 100

Melbourne | supporter | 35

New York | starving | 204

Portland | starving | 212

...

Now, say I wanted to show the city on the left, and the subscriber level across the top. In version 9.6, I could do this:

lwn=# select city, level, count(*)

lwn-# from lwn_subscribers

lwn-# group by city, level

lwn-# \crosstabview city level count

city | starving | leader | supporter | professional

---------------+----------+--------+-----------+--------------

San Francisco | 203 | 114 | 50 | 266

Brisbane | 198 | 100 | 36 | 255

Melbourne | 96 | 36 | 35 | 113

New York | 204 | 105 | 48 | 271

Portland | 212 | 94 | 50 | 243

Perth | 93 | 46 | 21 | 111

That's a result just a bit of formatting away from a finished report. The PostgreSQL command-line interface also now includes the ability to edit VIEW definitions. Given that the project's developers use psql more often than any other database client, you can expect them to keep adding features to it in every version.

All New pgAdmin

In further database client news, the PostgreSQL project's semi-official graphical user interface, pgAdmin, has undergone three total rewrites over the years. The first two versions, pgAdmin and pgAdmin2, written in different releases of Visual Basic. In 2002, it was re-written in C++ and wxWindows in order to be multi-platform and released as "pgadmin3". The new version, pgAdmin4, has been re-created again, this time in Python, Flask, JavaScript, and Qt.

This means that, for the first time, pgAdmin has both browser-based and desktop versions. Given the decline of desktop client applications among developers, and the popularity of PostgreSQL with the Python community, the current rewrite seems timely.

To use the browser-based version, a user installs the Flask web application on their database server, or on another server that can connect to the database server. Then the user can access and manage their databases using an the graphical interface. For example, here's the "monitoring" view for a server:

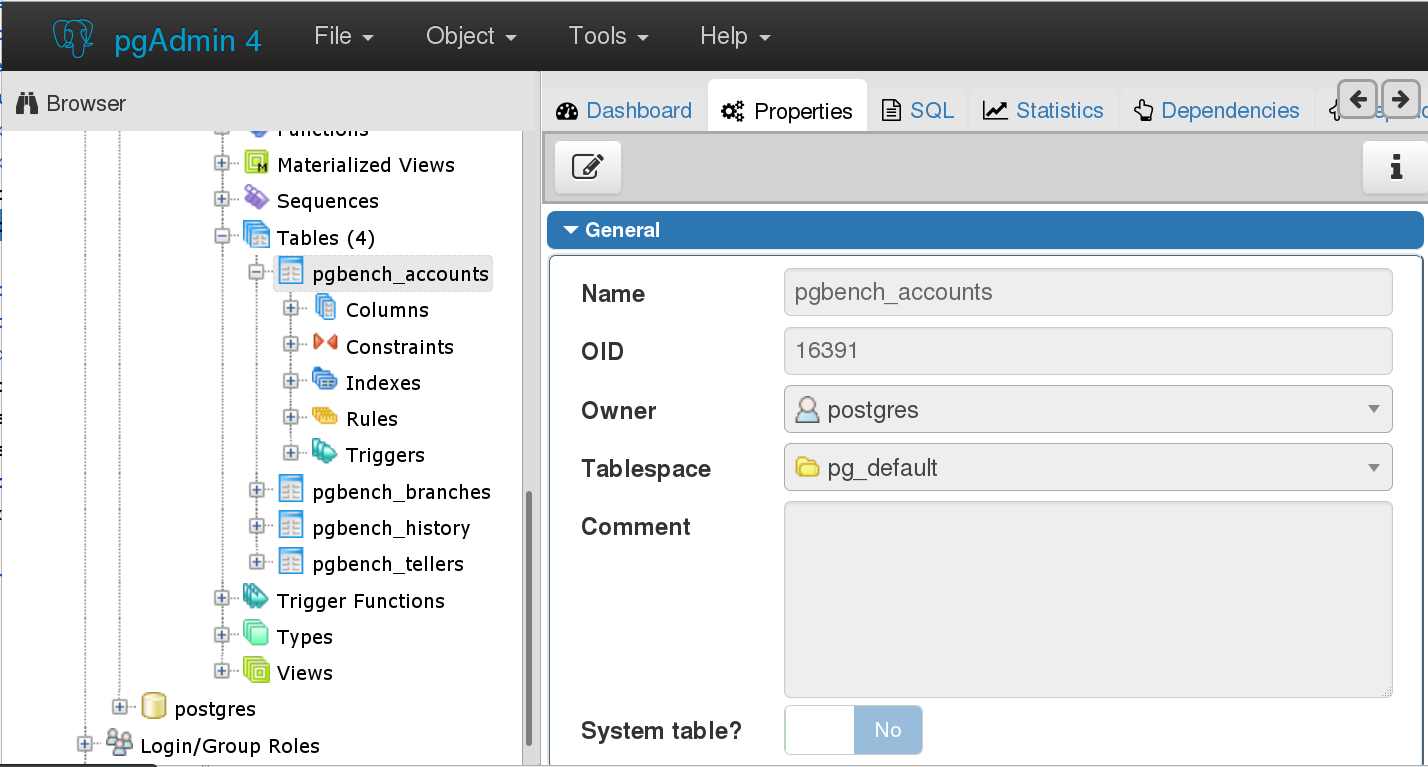

You can also explore and modify database objects:

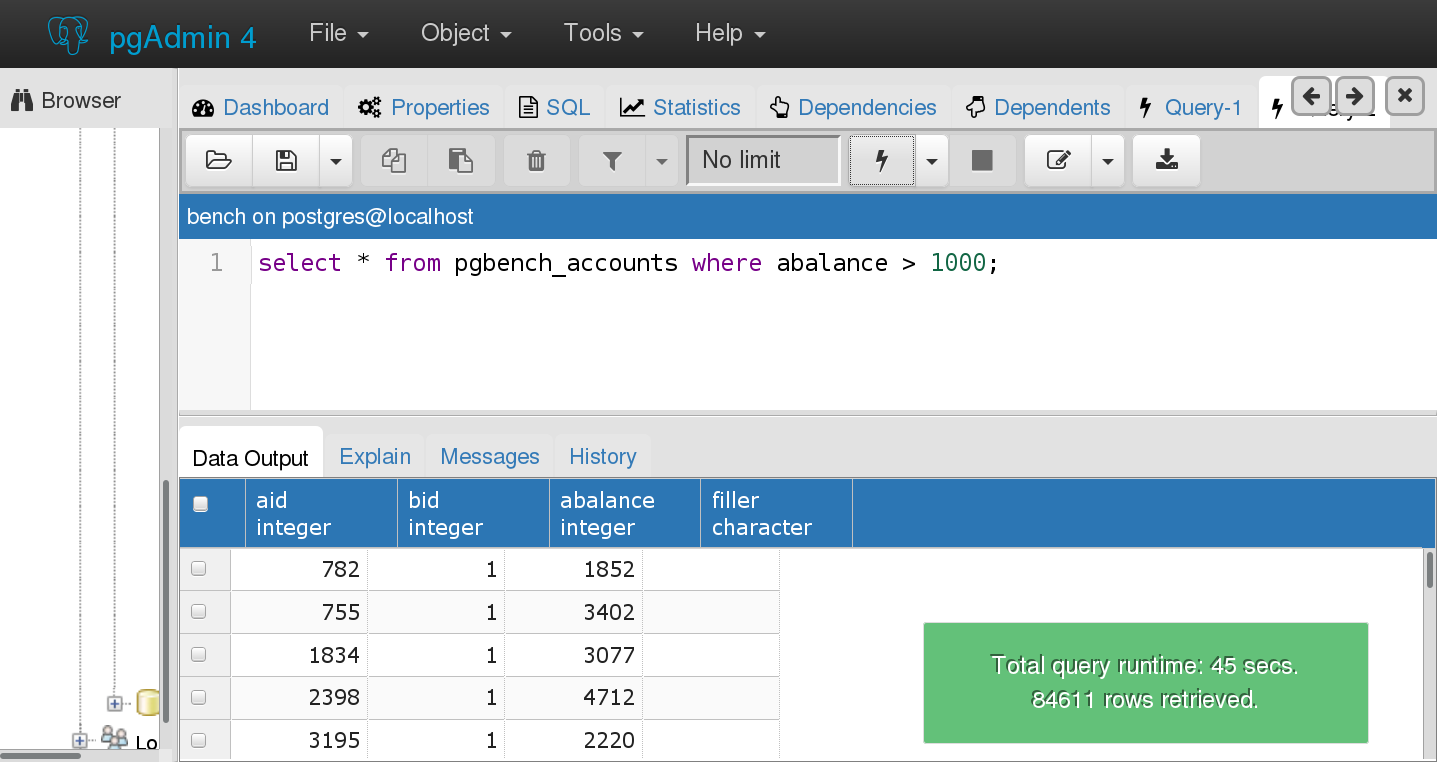

And run SQL queries:

It is a complete database administration tool, covering other activities such as backups, data import/export, and permissions management. While this Flask application could be packaged with each PostgreSQL server, a single pgAdmin node can connect to any PostgreSQL servers on the same network where the user has an administrative login.

The desktop version is based on the same basic Python code, but uses Python-Qt to offer a desktop application for users who prefer that to browser-based interfaces.

On to PostgreSQL 10

Now that PostgreSQL 9.6 is out, the developers have moved on to working on the next version, to be named PostgreSQL 10. This also involves a change in how the project does version numbers. As with other community open source projects, exact feature lists are unpredictable. Some features are under heavy development and look likely for the next version, including:

- The pglogical streaming logical replication tool will allow simpler, more reliable replication between PostgreSQL databases of different major versions. Among other things, this will allow "hot upgrade" via replication.

- Improved hash indexes, making this index type useful in PostgreSQL for the first time.

- Large reductions in write amplification, addressing some of the complaints of former PostgreSQL user Uber.

- A new declarative table partitioning feature will be both easier to use and more performant than the current implementation.

- SCRAM authentication support for much more secure password management and handling.

Regardless of what new features are in the next version, though, PostgreSQL 9.6 has given users plenty of reasons to upgrade.

| Index entries for this article | |

|---|---|

| GuestArticles | Berkus, Josh |

(Log in to post comments)

PostgreSQL 9.6 improves synchronous replication and more

Posted Oct 20, 2016 10:48 UTC (Thu) by Darkmere (subscriber, #53695) [Link]

PostgreSQL 9.6 improves synchronous replication and more

Posted Oct 20, 2016 12:09 UTC (Thu) by niner (subscriber, #26151) [Link]

9.4 contains fixes that make a pg_upgrade much, much, much faster when you have lots of relations. This means that with ~ 150K tables and views a pg_upgrade won't die anymore due to running out of RAM (only 32 GiB available) after several hours, but instead will finish after about 20 minutes with at most 8 GiB RAM used during the process.

This means we can now upgrade to 9.6 at almost any time :)

PostgreSQL 9.6 improves synchronous replication and more

Posted Oct 21, 2016 20:04 UTC (Fri) by jberkus (guest, #55561) [Link]

Mind you, I'm looking forward to Postgres 10 with hot upgrades via logical replication.