5 Hadoop Trends to Watch in 2017

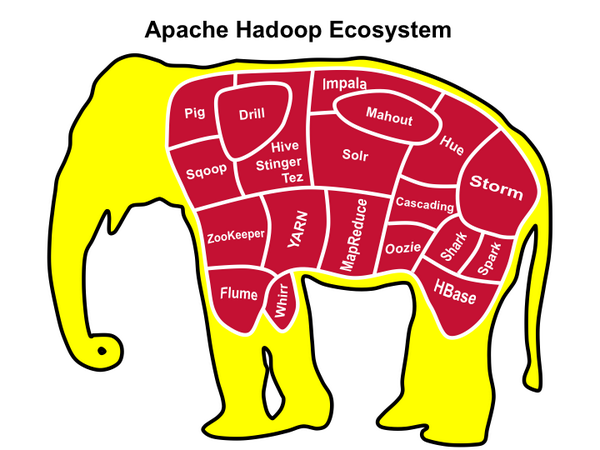

Hadoop is synonymous with big data, providing both storage and processing resources for large and disparate data sources—not to mention a platform for third-party software vendors to build upon. Where will the distributed computing system go in 2017? Here are five macro trends impacting Hadoop to keep an eye out this year.

Later this month, Hadoop will turn 11 years old. That’s an eternity in the IT biz. But will it be enough to slow the spread of adoption?

1. It Ain’t Dead Yet

Rumors of Hadoop’s demise are greatly exaggerated, according to George Corugedo, CTO of data integration software vendor RedPoint Global. “Don’t be a Ha-dope!,” he declares. “For all those folks running around saying Hadoop is dead – they’re dead wrong.”

Corguedo predicts that we’ll see increased adoption of Hadoop in 2017. “So far this year [2016], I haven’t talked to a single organization with a digital data platform who doesn’t see Hadoop at the center of their infrastructure. Hadoop is an assumed part of every modern data architecture and nobody can question the value it brings with its flexibility of data ingestion and its scalable computational power. Hadoop is not going to replace other databases but it will be an essential part of data ingestion in the IoT/digital world.”

Hadoop’s momentum and flexibility will keep it relevant for years to come

There are some numbers to back up the claim that Hadoop usage is expanding, not shrinking. In a recent survey, AtScale, which develops a BI solution for Hadoop, found production deployments of Hadoop expanded from 64% of surveyed organizations in 2015 to 73% in 2016.

If the Hadoop distributors can address the data governance issues and the complexity of Hadoop sub-project integration, it could be a golden year for Hadoop. Kunal Agarwal, the CEO of Hadoop performance software vendor Unravel Data, sees a big year ahead for Hadoop and its in-memory sidekick Spark.

“[In 2016] we saw a complete shift in the way many enterprises viewed Hadoop and Spark,” Agarwal tells Datanami. “What was once thought of as an unstable platform is becoming the cornerstone of any company utilizing big data in their solutions.”

The folks at Infogix are similarly bullish on the yellow elephant. “More and more organizations will be adopting Hadoop and other big data stores, in turn, vendors will rapidly introduce new, innovative Hadoop solutions,” Infogix CEO and President Sumit Nijhawan says.

2. Cloud Gravity Grows

You’ve undoubtedly heard arguments about which Hadoop distributors has the biggest installed base. Is it Cloudera, the oldest and most mature vendor? Or Hortonworks with its close ties to open source? Or the proudly proprietary MapR?

The truth is, they all pale in comparison to Amazon Web Services, which boasts a Hadoop installed based that’s bigger than the pure-play software providers combined. Amazon offers its own version of Apache Hadoop, dubbed Elastic MapReduce (EMR), which runs upon its Elastic Cloud Compute (EC2) infrastructure. And of course, customers are free to run Apache Hadoop, CDH, HDP, and MapR distributions on their own EC2 instances as well.

Cloud-based Hadoop deployments are growing for a number of reasons

Hadoop BI software vendor AtScale sees the cloud looking large in Hadoop’s future. In its recent survey, the company found more than half of respondents have big data solutions living on the cloud today, increasing to nearly three-quarters in the future.

The built-in auto-scaling functionality of cloud-based Hadoop offerings provides a big advantage over traditional Hadoop software that lacks that scalability feature, says Dave Mariani, the CEO of AtScale. “The existing on-premise Hadoop distros (Cloudera, Hortonworks, MapR) will be at a disadvantage compared to the cloud-based ‘Hadoop as a service’ providers like Amazon EMR, Google Dataproc, and Microsoft Azure HD Insight,” he says.

3. Machine Learning Automation

Not all Hadoop users see Hadoop as a platform for executing machine learning algorithms. Plenty of companies are taking advantage of Hadoop’s scalability to create super-sized data warehouses to execute familiar SQL statements for traditional ad-hoc and BI reporting purposes, using distributed SQL engines like Hive, Impala, Presto, and Spark SQL.

But you can’t deny the allure of Hadoop as a platform for ML. If the big data trend is all about finding valuable insights hidden in vast amounts of varied data, and then finding a way to act upon those insights in a predictive fashion to get ahead of the game, then the practice of training and scoring models using machine learning algorithms would have to be considered de rigueur for many Hadoop deployments.

But machine learning is difficult and typically requires the expertise of data scientist—or more likely, a team of skilled data scientists working in conjunction with experienced data engineers. That’s not the type of investment a CFO takes lightly. It also presents a ripe opportunity for automation through software.

We’ve been hearing about machine learning automation for years, but signs of a breakthrough coming in 2017 are hard to miss.

“Given the shortage of data scientists and the continuing growth of data, many are pointing to machine learning and automation as means to scale analytics efforts,” Adam Wilson, CEO of Trifacta, tells Datanami. “Machine learning and automation will become more effective in 2017, but will not yet equal the hype. Enterprises will realize that machine learning is best used to augment humans and make them more effective, not replace them.”

Toufic Boubez, VP Engineering for machine data intelligence vendor Splunk, sees machine learning becoming more accessible to a broader user base and applied more broadly in 2017.

“Machine learning capabilities will start infiltrating enterprise applications, and advanced applications will provide suggestions — if not answers — and provide intelligent workflows based on data and real-time user feedback,” he says. ” This will allow business experts to benefit from customized machine learning without having to be machine learning experts.”

4. Data Governance and Security

Companies are starting to realize that the business and security risks of having a poor data governance strategy are simply too great. Data may be the new oil, but oil leaks can be disasters for people around them. That will put the twin topics of data govern menace and security front and center for companies with big data initiatives in 2017—and in particular those building big data solutions on top of Hadoop.

Steve Wilkes, CEO of Striim, says enterprises will need to take a hard look at the security of hteir Hadoop-based data lakes. “Current practices that dump raw log files with unknown and potentially sensitive information into Hadoop will be replaced by systematic data classification, encryption and obfuscation of all long-term data storage,” he predicts.

The Hadoop community is moving to address its “junk drawer” problem

“Hadoop deployments and use cases are no longer predominantly experimental,” says Oracle Group Vice President for Big Data Balaji Thiagarajan. “Increasingly, they’re business-critical to organizations like yours. As such, Hadoop security is nonoptional.”

John Schroeder, executive chairman and founder of MapR Technologies, says the best companies will bifurcate their data projects around two general approaches: one focused on regulated data streams, and the other focused on non-regulated use cases.

“Regulated use cases data require governance; data quality and lineage so a regulatory body can report and track data through all transformations to originating source,” Schroeder says. “This is mandatory and necessary but limiting for non-regulatory use cases like customer 360 or offer serving where higher cardinality, real-time and a mix of structured and unstructured yields more effective results.

We saw data governance as a big data discipline gain steam in 2016, and that momentum will carry over into 2017 as companies continue to hash out the major obstacles preventing them from efficiently capitalizing on their data. Venky Ganti, the CTO of data governance solution provider Alation, says companies will need to contain the creeping sprawl if they’re going to succeed.

“Self-service analytics technologies have put analysis into the hands of more users and as a byproduct, led to the creation of derivative artifacts: additional datasets and reports, think Tableau workbooks and Excel spreadsheets,” Ganti says. “These artifacts have taken on a life of their own. In 2017, we will see a set of technologies begin to emerge to help organize these self-service data sets and manage data sprawl. These technologies will combine automation and encourage organic understanding, guided by well thought-out, but broadly applicable policies.

5. Data Fabrics Spreading

The movement of big data is often associated with water. Indeed, the big data ecosystem is replete with watery imagery. Data streams flow into data lakes and reservoirs with the help of products like Flume, Cascading, and MillWheel.

The data fabric concept stands to unite important aspects of data management, security, and self-service aspect in Hadoop and other big data platforms (agsandrew/Shutterstock)

But in the future, we may think of big data in other terms, such as fabric. In fact, that thread is starting to take hold among industry analysts like Forrester, which recently issued a report on the leading data fabric vendors, including Trifacta, Paxata, Informatica, Talend, IBM, Syncsort, SAP, Waterline Data, Oracle, Global IDs, and Denodo Technologies.

Forrester analyst Noel Yuhanna says the big data fabric market is small but growing rapidly. “Enterprises of all types and sizes are embracing big data, but the gap between business expectations and the challenges of supporting big data technology (such as Hadoop) has become the primary motivation to innovate with big data fabric,” Yuhanna writes. “The collection of technologies enables enterprise architects to integrate, secure, and govern various data sources through automation, simplification, and self services capabilities.”

What are you plans for Hadoop in 2017? Drop us a line with your Hadoop thoughts at editor(at)datanami(dot)com.

Related Items:

What 2017 Will Bring: 10 More Big Data Predictions

Big Data Speaks: 10 Industry Predictions for 2017